Once post production work has to be done by more than one person at a time, the speed of the network becomes an important consideration. This is because it is much more efficient if editors, motion graphics artists and colour graders can get access to the same video source files and most up-to-date edits. This is done with shared storage connected to computers by a fast network.

People have also connected multiple computers together for many years to perform complex tasks. In post production, more and more computing is being done using advanced GPUs. Multiple computers combined to perform complex tasks together are known as render farms. The faster the connections between the computers, the better.

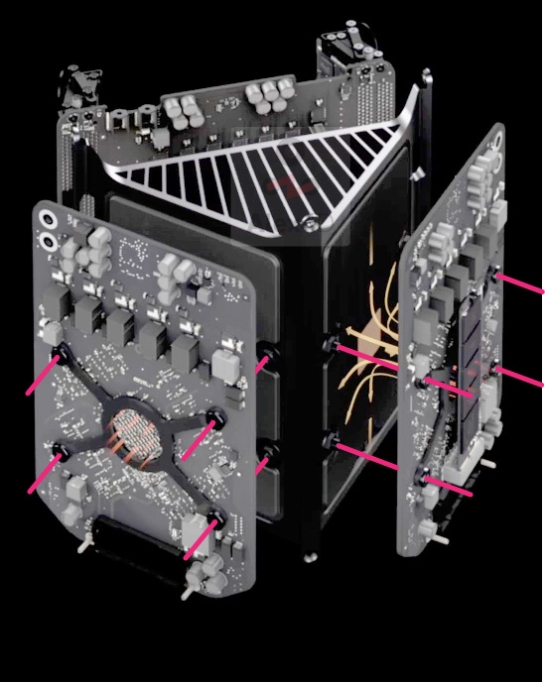

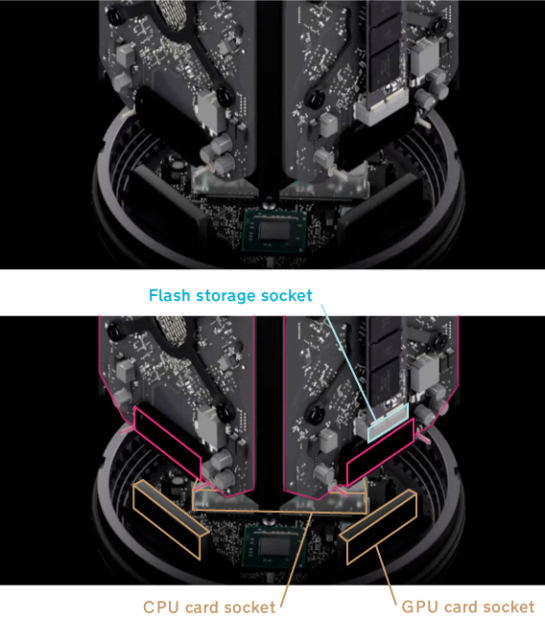

Current Mac Pros can have PCIe network cards installed, and those cards can be used with Thunderbolt-equipped Macs using an expansion chassis. However, other Macs don’t have fast network connections built in and can’t use PCIe cards.

According to FAQ-MAC, a feature of Apple’s forthcoming Mac OS X 10.9 Mavericks might allow many more Macs to be used in simple render farms: IP over Thunderbolt.

They showed a dialog box (which they may have mocked up) that shows Mavericks asking whether a newly attached Thunderbolt cable should be used as a network connection:

Detected a new network interface:

Thunderbolt Bridge

Check that it is configured correctly, and then click Apply to activate.

Internet Protocol over Thunderbolt means that you can connect Macs with Thunderbolt cables and use those cables as a network connection. Thunderbolt 1 connections have a theoretical maximum transfer rate of 10 Gb/s, similar to the speed of 10 gigabit Ethernet, a popular post production networking standard.
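To put the 10 Gb/s figure in context, here is a rough back-of-the-envelope sketch of how long a media file would take to copy at different link speeds. The 100 GB file size, the `transfer_seconds` helper and the `efficiency` parameter are my own assumptions for illustration. Real-world throughput will be lower than the theoretical maximum because of protocol overhead and storage speed.

```python
def transfer_seconds(size_gigabytes, link_gbps, efficiency=1.0):
    """Estimate seconds needed to move a file over a network link.

    size_gigabytes: file size in decimal gigabytes (1 GB = 8 gigabits).
    link_gbps: nominal link speed in gigabits per second.
    efficiency: fraction of nominal speed actually achieved (assumed, 0 < e <= 1).
    """
    if link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link speed must be positive and efficiency in (0, 1]")
    size_gigabits = size_gigabytes * 8
    return size_gigabits / (link_gbps * efficiency)


if __name__ == "__main__":
    file_gb = 100  # hypothetical size of a batch of source clips
    links = [
        ("Gigabit Ethernet", 1),
        ("Thunderbolt 1 / 10 gigabit Ethernet (theoretical)", 10),
    ]
    for name, gbps in links:
        print(f"{name}: {transfer_seconds(file_gb, gbps):.0f} s")
```

At the theoretical rates, the hypothetical 100 GB batch takes 800 seconds over gigabit Ethernet and 80 seconds over a 10 Gb/s link. Lowering `efficiency` gives a more pessimistic estimate.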

I assume IP over Thunderbolt is less efficient than a dedicated Fibre Channel PCI Express card, but at least Thunderbolt is available on a wide range of Macs.

With a little distributed rendering, my 27” iMac connected to a pair of Thunderbolt-equipped Macs will get through QuickTime encodes much more quickly.
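As a minimal sketch of what simple distributed rendering involves, the job is divided into frame ranges and each Mac gets one. This is not how any particular encoder or render manager does it; the `split_frames` helper and the machine names are hypothetical, and a real setup would also need to send the work out and collect the results.

```python
from typing import Dict, List


def split_frames(total_frames: int, nodes: List[str]) -> Dict[str, range]:
    """Divide frames 0..total_frames-1 into contiguous ranges, one per node.

    Any remainder is spread one frame at a time over the first nodes,
    so no machine gets more than one frame extra.
    """
    if total_frames < 0 or not nodes:
        raise ValueError("need a non-negative frame count and at least one node")
    base, extra = divmod(total_frames, len(nodes))
    assignments = {}
    start = 0
    for index, node in enumerate(nodes):
        count = base + (1 if index < extra else 0)
        assignments[node] = range(start, start + count)
        start += count
    return assignments


if __name__ == "__main__":
    # Hypothetical machine names: one iMac plus two Thunderbolt-connected Macs.
    macs = ["imac-27", "macbook-pro", "mac-mini"]
    for mac, frames in split_frames(1500, macs).items():
        print(f"{mac}: frames {frames.start} to {frames.stop - 1}")
```

An even split like this assumes the Macs are equally fast. With mixed hardware, you would probably weight the ranges by each machine's speed.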

Also, if I need to share 4K proxies with others, IP over Thunderbolt is good news.